You’ve trained, configured, and tested your AI. You’ve set it live, people have been using it, and conversations are coming in. Now it is time to review and analyze these conversations so you can improve the answers, whether that means adding or updating training sources or adjusting the configuration.

Conversations list view

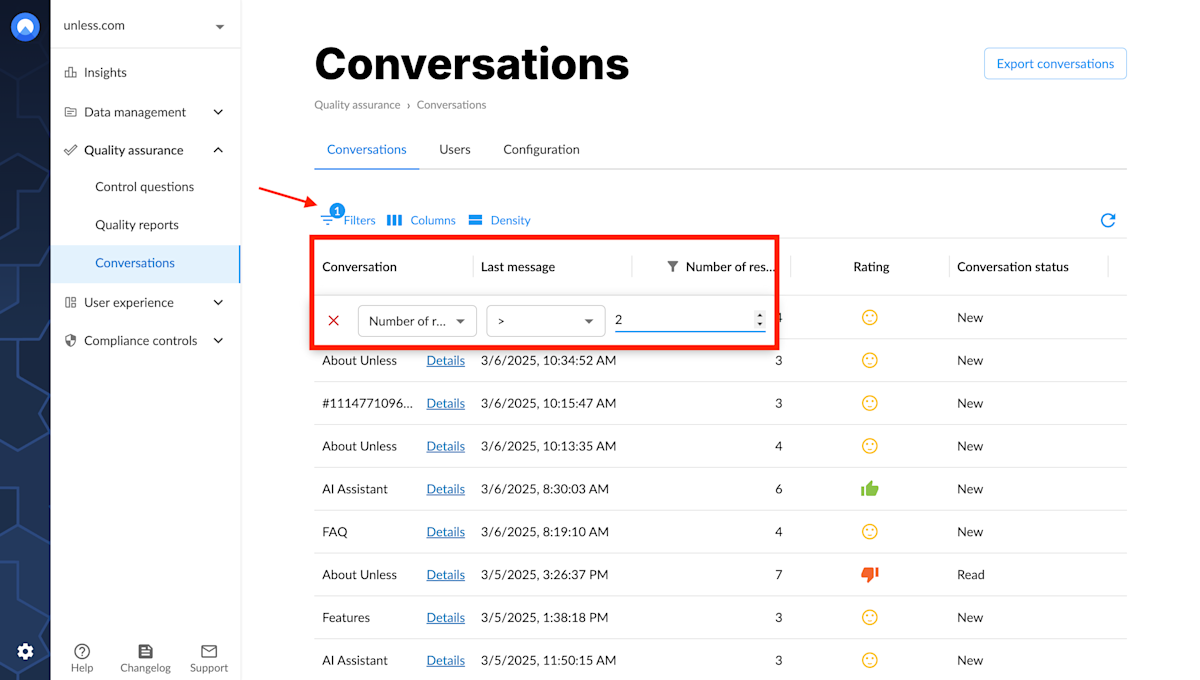

In the Conversations tab of your Unless dashboard, you will find a list view of all conversations from the last 30 days. For each conversation, you will see the date and time of the last message, the number of responses within the conversation, the rating it received from the user, and the conversation status.

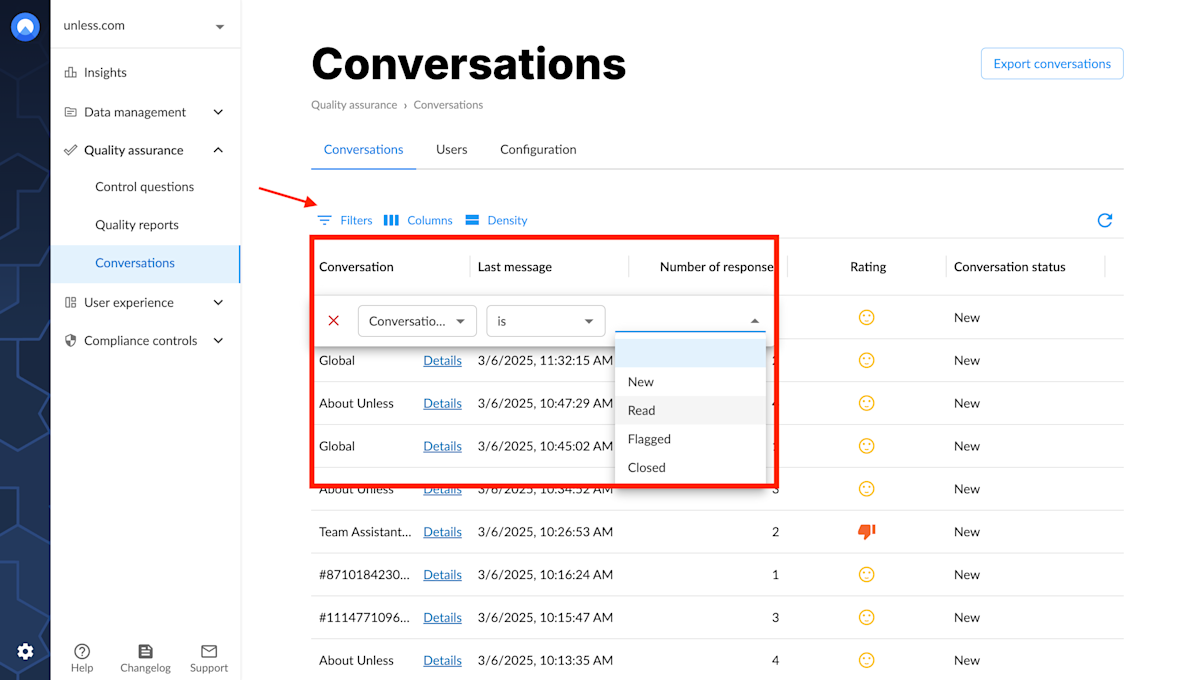

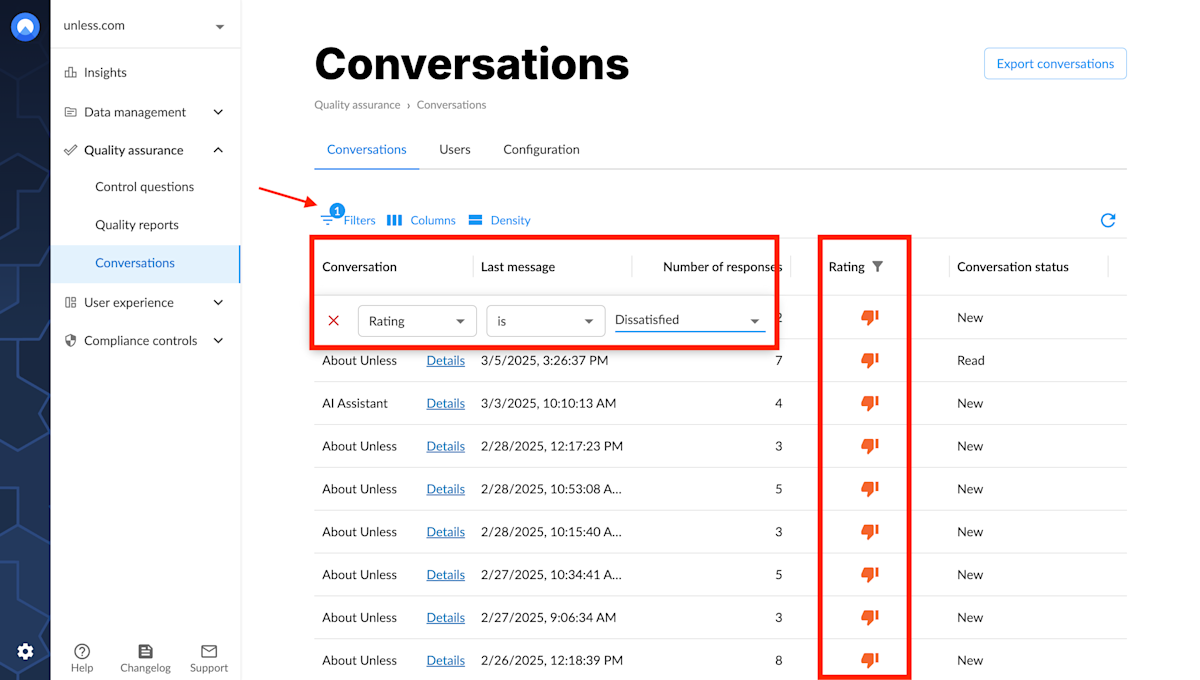

Filtering options

You can filter the conversations by rating, number of responses, status, and more to make them easier to review. Reviewing these conversations shows you whether the AI is responding the way you would expect. It also shows you whether you need to add more training sources or update (or remove) existing ones.

Filtering and reviewing these conversations can provide insight into what your users and customers are asking and what their needs are. This can inform the content you create in the future, such as additional articles, FAQs, user guides, and onboarding procedures, as well as any product decisions you make.

Tip: Longer conversations tend to provide more insight, so we recommend filtering for conversations with at least 2 responses. You can always opt for a higher number.

Conversation details page

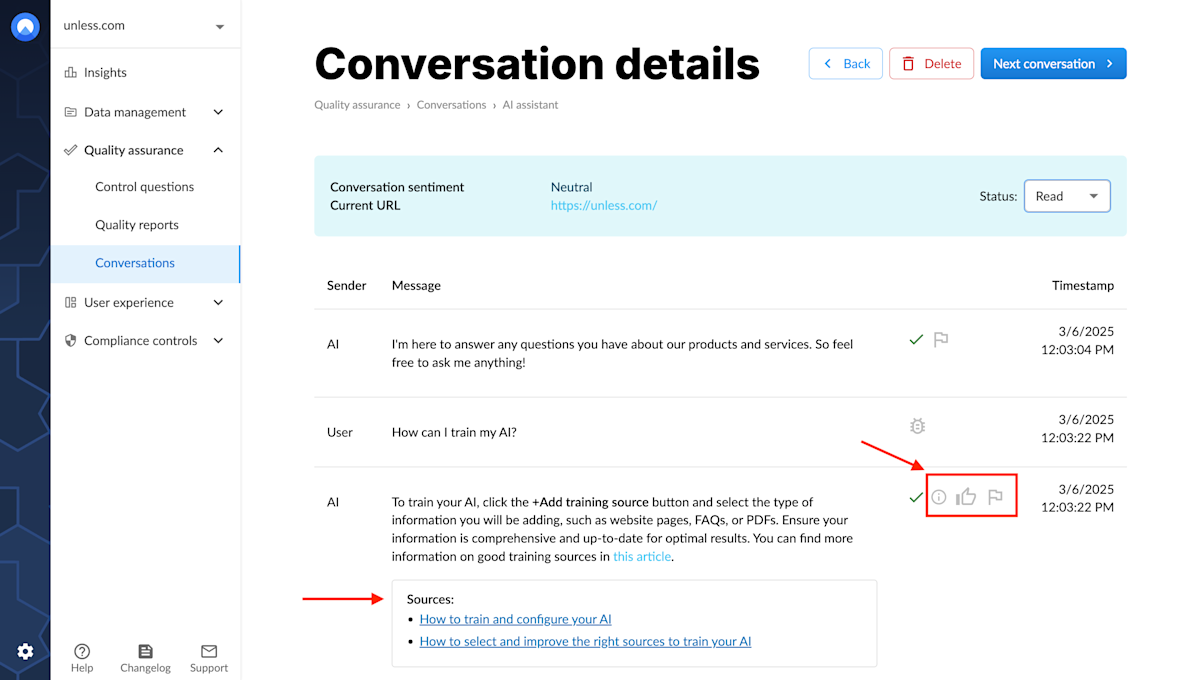

Clicking Details for a conversation takes you to the details view, which shows all messages within that conversation along with any thumbs up/down ratings attached to the responses.

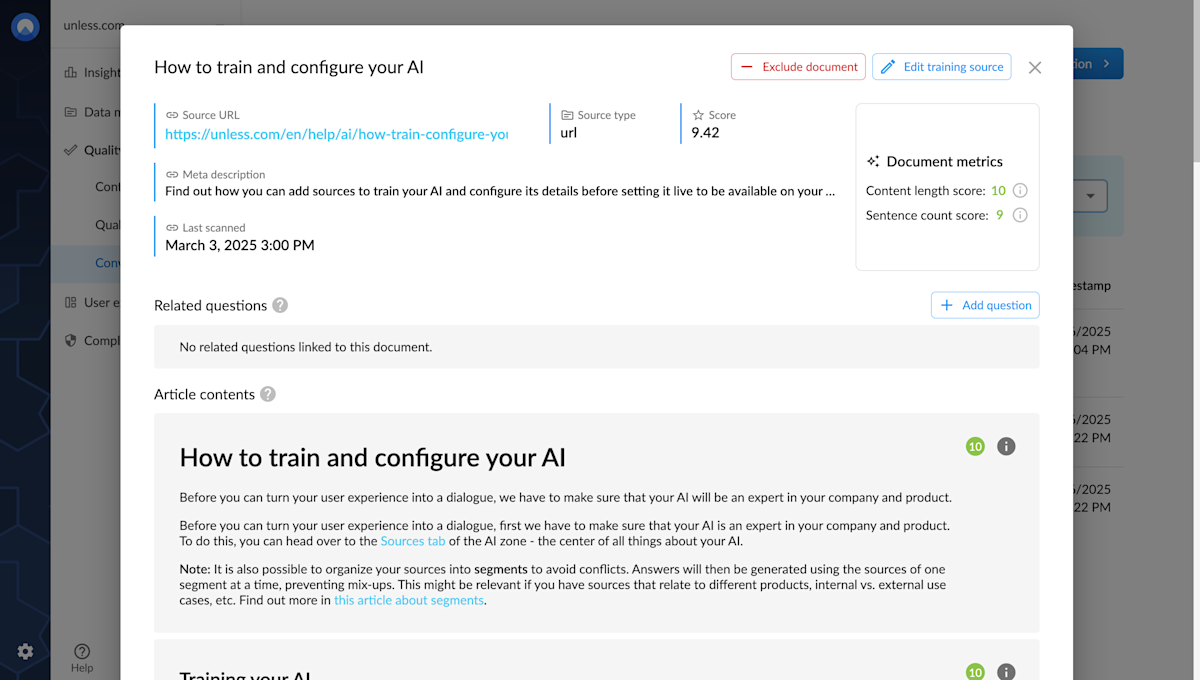

Viewing a source

You can take a quick glance at the sources used for a response, and click any listed source to open it and review its content. If there are any inconsistencies between the AI answer and what you’d expect, this is a quick way to troubleshoot. You can remove a source that contains wrong or outdated information and add an updated version in its place.

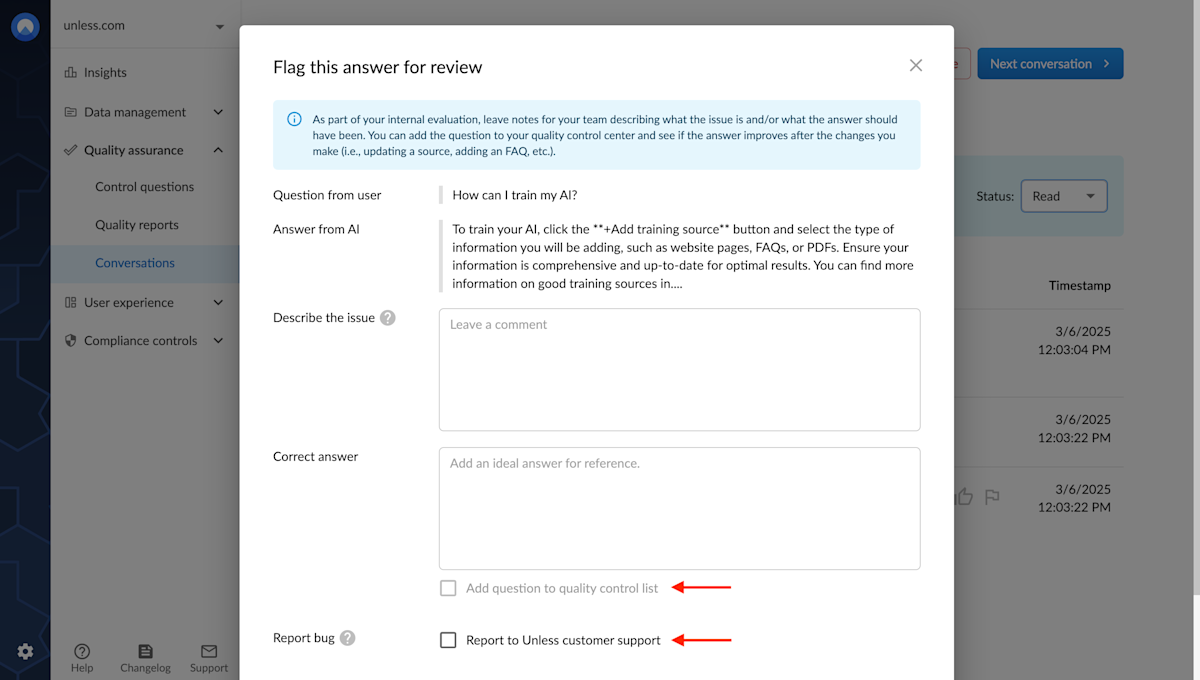

Flagging a response

You can flag a response as part of your internal evaluation. If you believe there’s a technical bug, you can also check the box to report it to the Unless team. This way, when analyzing conversations, you can flag responses and leave notes for your colleagues describing what the issue is and/or what the answer should have been.

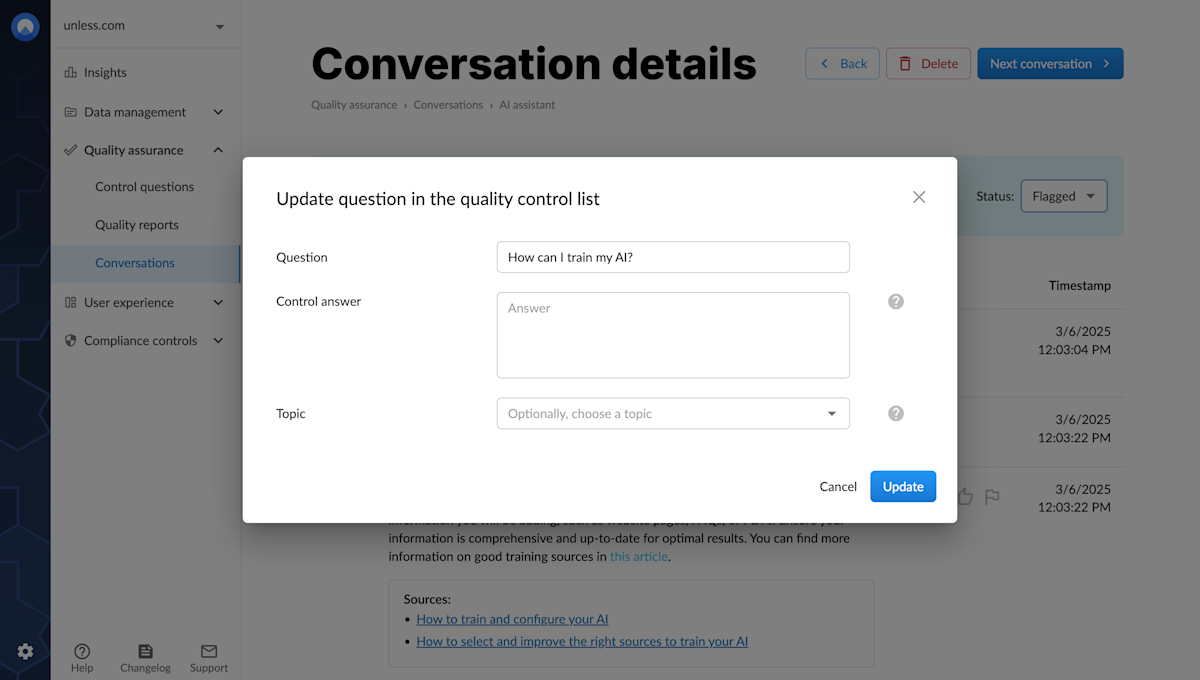

At this stage, you can also add the question to your Quality control questions. Don't worry if the question isn't phrased the way you would structure it: once you click save, you can edit both the question and the control answer.

When training and configuring your AI, make sure to also take a look at the quality control center. You can read more about that in this article.